Data Engineer Career Guide: Trends, Tools, and Future Prospects for 2024

Numerous articles have named Data Engineer as one of the most sought-after IT jobs in 2024. According to Andrei Neagoie, a prominent software developer, instructor, and writer in the tech industry, Data Wrangler (or Data Engineer) is one of the top 5 in-demand tech jobs for 2024.

Sources like Free-Work and Hays also emphasize the high demand for Data Engineers. Free-Work, a leading French job board with over half a million monthly visits, lists Data Engineer among the top 10 in-demand tech jobs for 2024. Hays, a global leader in workforce solutions and one of the largest staffing firms in the U.S., agrees with this assessment.

You might be wondering how developments in AI will affect the demand for Data Engineers. Rest assured: projections from the U.S. Bureau of Labor Statistics indicate that employment of Database Administrators and Architects (a category that includes Data Engineers) is expected to grow by 8 percent from 2022 to 2032, faster than the average for all occupations.

Data Engineer Salary

According to ZipRecruiter, as of June 30, 2024, the average annual pay for a Data Engineer in the United States is $129,716. That works out to approximately $62.36 an hour, $2,494 a week, or $10,809 a month. According to Glassdoor, the estimated average annual salary for a Data Engineer is A$110,000 in Australia, ₹950,000 in India, and €65,000 in Germany.
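If you want to run these conversions yourself, they are simple division. The sketch below assumes a 40-hour week and 52 paid weeks a year (2,080 hours); ZipRecruiter does not spell out its method here, and its published weekly and monthly figures appear to be truncated to the dollar rather than rounded, so the cents and last digit may differ slightly.

```python
# Convert an annual salary into hourly, weekly, and monthly equivalents.
# Assumption: 40 hours/week and 52 weeks/year, i.e. 2,080 paid hours per year.
HOURS_PER_WEEK = 40
WEEKS_PER_YEAR = 52
MONTHS_PER_YEAR = 12


def salary_breakdown(annual: float) -> dict[str, float]:
    """Return the annual salary expressed per hour, per week, and per month."""
    return {
        "hourly": annual / (HOURS_PER_WEEK * WEEKS_PER_YEAR),
        "weekly": annual / WEEKS_PER_YEAR,
        "monthly": annual / MONTHS_PER_YEAR,
    }


if __name__ == "__main__":
    # ZipRecruiter's U.S. average as of June 30, 2024.
    for period, amount in salary_breakdown(129_716).items():
        print(f"{period:>8}: ${amount:,.2f}")
```

With the ZipRecruiter average this gives about $62.36 an hour, $2,494.54 a week, and $10,809.67 a month.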

What a Data Engineer Really Is and Does

You have probably come across many definitions of a Data Engineer on the Internet. In essence, a Data Engineer is a person whose job is to take data from its raw form to a state where it is ready for use by others, such as Data Analysts and Machine Learning Engineers.

Easy, isn’t it?

But wait, look at this picture:

One of the challenges is the sheer number of tools. Data Engineers must choose the most efficient tools for processing and serving data. Their other tasks include developing data pipelines (ETL pipelines) and ensuring that the data is of high quality.
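To make "developing pipelines and ensuring data quality" a bit more concrete, here is a minimal ETL (extract, transform, load) sketch in plain Python. The CSV content, the column names (`order_id`, `amount`, `country`), and the three quality rules are all hypothetical, and a Python list stands in for the target table; real pipelines read from and write to actual systems, usually with dedicated tools.

```python
import csv
import io

# Hypothetical raw export; in practice this would come from a file, an API, or a database.
RAW_CSV = """order_id,amount,country
1,19.99,us
2,,de
3,5.50,FR
3,5.50,FR
4,-2.00,us
"""


def extract(text: str) -> list[dict[str, str]]:
    """Extract: parse raw CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[dict]:
    """Transform: cast types, normalise values, and apply simple quality rules."""
    clean, seen_ids = [], set()
    for row in rows:
        if not row["amount"]:      # rule 1: amount must be present
            continue
        amount = float(row["amount"])
        if amount < 0:             # rule 2: reject negative amounts
            continue
        order_id = int(row["order_id"])
        if order_id in seen_ids:   # rule 3: drop duplicate orders
            continue
        seen_ids.add(order_id)
        clean.append(
            {"order_id": order_id, "amount": amount, "country": row["country"].upper()}
        )
    return clean


def load(rows: list[dict], target: list[dict]) -> None:
    """Load: append cleaned rows to the target (a list standing in for a table)."""
    target.extend(rows)


if __name__ == "__main__":
    warehouse_table: list[dict] = []
    load(transform(extract(RAW_CSV)), warehouse_table)
    print(warehouse_table)
```

Only orders 1 and 3 survive: order 2 has no amount, the second copy of order 3 is a duplicate, and order 4 has a negative amount.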

The Data Engineer's Position in the Data Field

Are you confused about the differences between a Data Engineer, a Data Analyst, a Machine Learning Engineer, and a Data Scientist?

I think a picture from Raj Gandhi makes it clear.

This image explains the differences between the roles and also shows the order in which data flows between them.

The Data Engineer prepares the data, and the Data Analyst then analyzes, visualizes, and presents it. A further step is building models that make predictions, which is the job of Machine Learning Engineers. All three roles fall under Data Science, and in my view a Data Scientist should be able to handle the whole process end to end.

Real Skills for Data Engineers

Foundation Skills

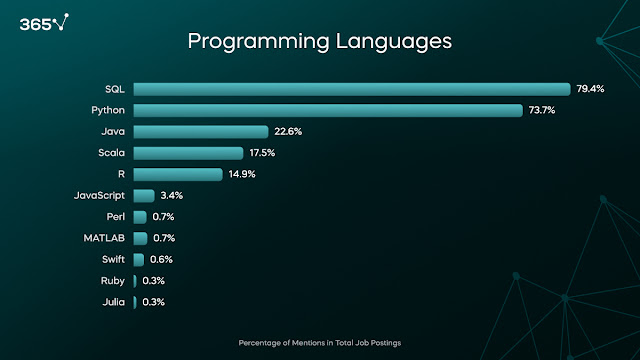

- SQL (79.4%): SQL (Structured Query Language) is the standard language for working with relational databases. It is essential for querying data and for managing data stored in structured formats.

- Python (73.7%): Python is a programming language widely used in data science, thanks to its comprehensive libraries and its flexibility for data manipulation, processing, and machine learning.
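Here is a small illustration of how the two foundation skills work together: SQL does the querying, while Python drives the query and post-processes the results. The sketch uses Python's built-in `sqlite3` module with an in-memory database so it runs anywhere. The `sales` table and its rows are made up for the example.

```python
import sqlite3

# In-memory SQLite database; the table and rows are hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (region, amount) VALUES (?, ?)",
    [("north", 120.0), ("south", 80.0), ("north", 30.0), ("west", 50.0)],
)

# SQL: aggregate revenue per region, highest first.
query = """
    SELECT region, SUM(amount) AS total
    FROM sales
    GROUP BY region
    ORDER BY total DESC
"""
totals = conn.execute(query).fetchall()

# Python: post-process the result set, e.g. compute each region's share of revenue.
grand_total = sum(total for _, total in totals)
for region, total in totals:
    print(f"{region}: {total:.2f} ({total / grand_total:.0%})")

conn.close()
```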

Slightly Above Foundational Skills

There are many tools and technologies available, but you should focus on the ones most companies use.

- Data Warehouse. A Data Warehouse is a system that collects, stores, and manages data from various sources in a central repository, enabling the analysis of historical data. It is also worth learning the leading tools on the market: Snowflake, BigQuery, Redshift, and Synapse Analytics.

- Data Processing. Data processing is a series of operations on data, usually performed by a computer, to retrieve, transform, or classify information. There are two main approaches: batch processing and real-time processing.

- Batch processing handles data in large chunks on a schedule, for example processing a large accumulated dataset (such as the previous month's data) once or twice a day. Apache Spark, an open-source framework designed for efficient large-scale data processing, is widely used for batch workloads.

- Real-time (stream) processing handles data continuously as it arrives. Apache Kafka, Apache Flink, and Apache Storm are commonly used for real-time processing; you can pick one of them to learn in depth.

- Workflow Orchestration. Workflow orchestration automates and manages complex, multi-step processes. It enables teams to quickly create, deploy, and monitor workflows whose tasks would be too complex or inefficient for engineers to run ad hoc. A widely used tool is Apache Airflow.

- Cloud Computing. Cloud computing is the practice of using a network of remote servers hosted on the internet to store, manage, and process data, rather than a local server or a personal computer. The three leading cloud providers are AWS, Azure, and GCP.
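Orchestrators such as Apache Airflow model a workflow as a directed acyclic graph (DAG) of tasks and start each task only after all of its upstream tasks have finished. The sketch below imitates that core idea with Python's standard-library `graphlib` module (Python 3.9+). It is not the Airflow API, and the task names describe a hypothetical daily batch job; a real orchestrator adds scheduling, retries, parallelism, and monitoring on top.

```python
from graphlib import TopologicalSorter

# Hypothetical daily batch workflow: each task maps to the set of tasks it depends on.
DAG = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_join": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform_join"},
    "refresh_dashboard": {"load_warehouse"},
}


def run_task(name: str, log: list[str]) -> None:
    """Stand-in for real work (a Spark job, a SQL statement, an API call, ...)."""
    log.append(name)


def run_workflow(dag: dict[str, set[str]]) -> list[str]:
    """Run every task once, never before all of its dependencies have run."""
    log: list[str] = []
    for task in TopologicalSorter(dag).static_order():
        run_task(task, log)
    return log


if __name__ == "__main__":
    print(run_workflow(DAG))
```

`static_order()` raises `graphlib.CycleError` if the dependencies form a cycle, which mirrors why orchestrators require workflows to be acyclic: a cycle would leave no valid order in which to run the tasks.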

Advanced Skills (Examples):

1. Modern Data Stack

A modern data stack is a group of cloud-based tools that help collect, process, store, and analyze large amounts of data. These tools make it easier and faster for businesses to use data for making decisions. Cloud data warehouses, which can quickly handle big data with SQL support, have made this process more affordable. This has led to the development of easy-to-use, scalable, and cost-effective tools, all working together as the Modern Data Stack (MDS).

The emergence of cloud computing and cloud data warehousing has driven the growth of the modern data stack and a shift from ETL (transforming data before loading it) to ELT (loading raw data first and transforming it inside the warehouse). This shift enables better connectivity and more flexible use of various data services through cloud-based integration. The modern data stack, which has gained popularity since the early 2010s with cloud data warehouses such as BigQuery, Redshift, and Snowflake, supports agile analysis and flexible data management. Complementary tools such as Chartio, Looker, Tableau, Stitch, Fivetran, MongoDB, Cassandra, and Elasticsearch further extend its capabilities and its integration with big data solutions.
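The practical difference between ETL and ELT is where the transformation happens. In ELT, raw records are landed in the warehouse untouched and then reshaped there with SQL. A minimal sketch, with an in-memory SQLite database standing in for a cloud warehouse such as BigQuery or Snowflake; the table names and events are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Extract + Load: land the raw records as-is, messy values included.
conn.execute("CREATE TABLE raw_events (user_id TEXT, event TEXT, ts TEXT)")
conn.executemany(
    "INSERT INTO raw_events VALUES (?, ?, ?)",
    [
        ("u1", "signup", "2024-06-01"),
        ("u1", "purchase", "2024-06-02"),
        ("u2", "signup", "2024-06-02"),
        ("u2", "PURCHASE", "2024-06-03"),  # inconsistent casing in the source
        ("u3", None, "2024-06-03"),        # missing event name
    ],
)

# Transform: build an analysis-ready table inside the "warehouse" using SQL.
conn.execute("""
    CREATE TABLE purchases_per_user AS
    SELECT user_id, COUNT(*) AS purchases
    FROM raw_events
    WHERE LOWER(event) = 'purchase'
    GROUP BY user_id
""")

rows = conn.execute(
    "SELECT user_id, purchases FROM purchases_per_user ORDER BY user_id"
).fetchall()
print(rows)
conn.close()
```

Because `raw_events` is kept as loaded, the transformation can be changed and rerun later without re-extracting the data from the source, which is one of the main attractions of ELT.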

A modern data stack is easier to use, more flexible, and better at handling big data than older systems, as it uses cloud technology that makes scaling and management simple. Older data stacks rely on physical servers and traditional databases that need complex setups and lots of maintenance, making them slower to adapt and more technical to manage. In contrast, modern data stacks are cloud-based, reducing costs and allowing even non-technical users to easily access and work with data. This modern approach enables businesses to quickly analyze data and make decisions without requiring deep technical expertise.

2. DevOps and CI/CD

Combining DevOps and Data Engineering makes managing data more efficient and flexible. DevOps focuses on automation and collaboration in software development, while data engineering handles data pipelines and storage. Together, they automate data processes, reduce errors, and allow quick responses to business needs.

Benefits:

- Automated Data Pipelines: Less manual work and fewer errors.

- CI/CD Practices: Faster testing and deployment, improving data quality.

- Collaboration: Better teamwork between data engineers and data scientists.

Essential tools:

- Containers and container orchestration: Docker and Kubernetes.

- CI/CD: Jenkins, GitLab, CircleCI.

- Monitoring: Prometheus, Grafana, ELK Stack.
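In a CI/CD setup, automated tests on pipeline code run on every change (for example in a Jenkins, GitLab, or CircleCI job) before anything is deployed, which is where the "faster testing, better data quality" benefit comes from. A minimal sketch: `normalize_country` is a hypothetical transformation, and the `test_*` functions follow the naming convention pytest uses to discover tests when they live in a file named like `test_transform.py`.

```python
from typing import Optional


# Hypothetical transformation that a pipeline might apply to every record.
def normalize_country(code: Optional[str]) -> Optional[str]:
    """Trim and upper-case a country code; map empty or missing values to None."""
    if code is None or not code.strip():
        return None
    return code.strip().upper()


# Functions named test_* are collected and run by pytest in the CI job.
def test_normalizes_case_and_whitespace():
    assert normalize_country(" us ") == "US"


def test_empty_values_become_none():
    assert normalize_country("") is None
    assert normalize_country("   ") is None
    assert normalize_country(None) is None


if __name__ == "__main__":
    # Allows a quick local run without pytest installed.
    test_normalizes_case_and_whitespace()
    test_empty_values_become_none()
    print("all tests passed")
```

If a later change breaks one of these rules, the CI job fails and the faulty code never reaches the production pipeline.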

Whether you're just starting your journey or looking to advance your career, understanding these trends and acquiring the right skills will set you on a path towards success in the data-driven world of tomorrow.

References:

https://zerotomastery.io/blog/tech-careers-in-high-demand/

https://365datascience.com/career-advice/data-engineer-job-market/
